Quick Start

Learn how to quickly set up and integrate bitHuman SDK into your application.

Quick Start Guide

bitHuman SDK enables you to build interactive agents that respond realistically to audio input. This guide covers installation instructions, a hands-on example, and an overview of the core API features.

Installation

System Requirements

Install bithuman

Agent Model Setup

To run the example, you’ll need to obtain the necessary credentials and models from the bitHuman platform. Follow these steps:

1

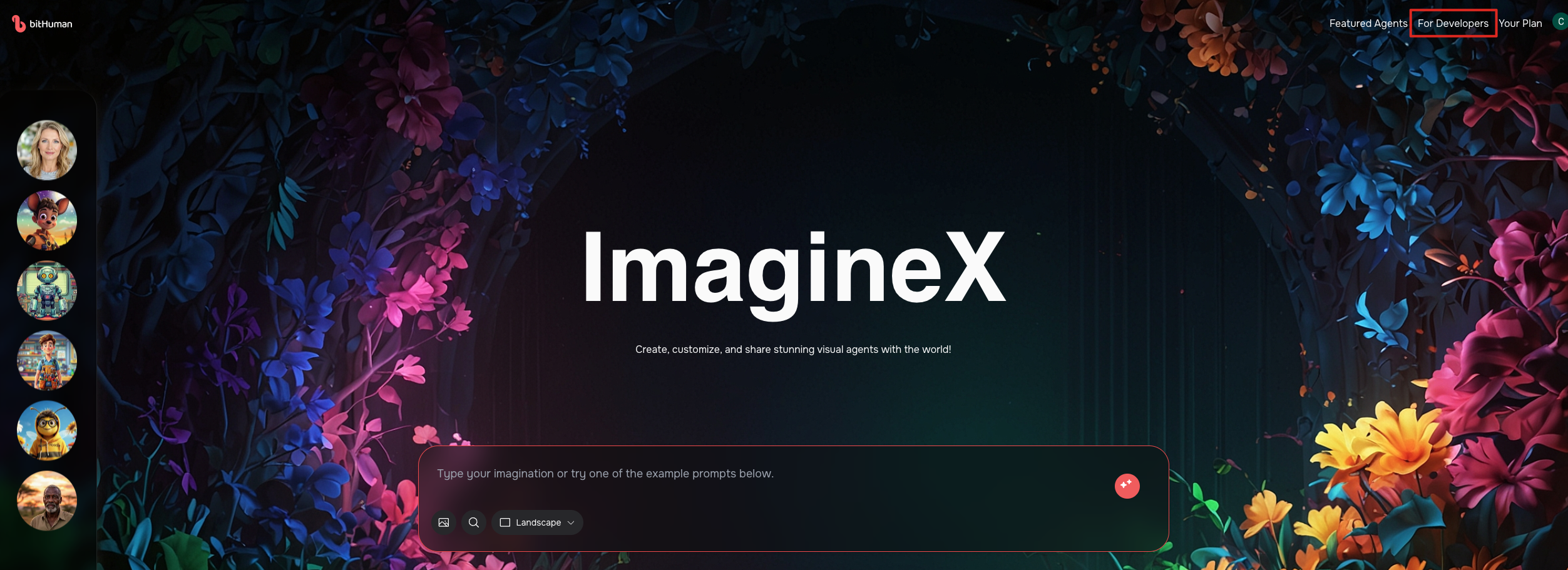

Access Developer Settings

- Log in to the bitHuman platform

- Click the “Developer settings” button in the top-right corner of the page

2

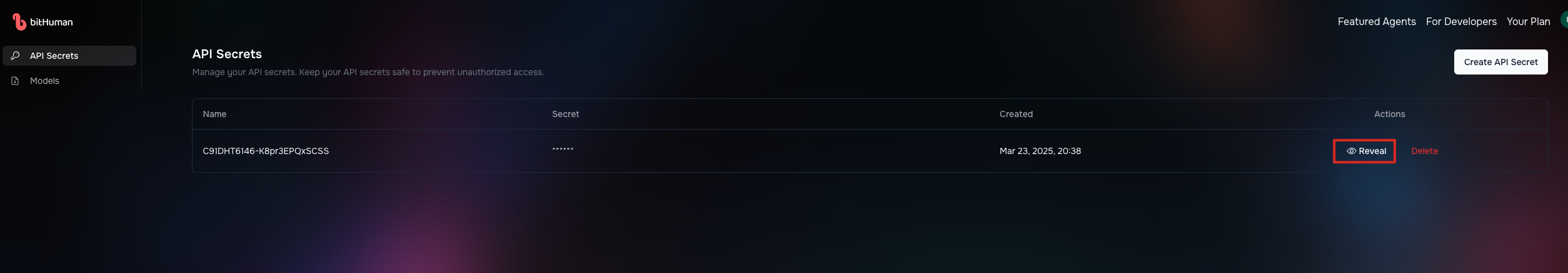

Get API Secret

- In the Developer Settings page, navigate to the “API Secrets” section in the left sidebar

- Click the “Reveal” button to view your API secret

Examples Overview

1. LiveKit Agent

Run a visual agent using bitHuman for visual rendering, the OpenAI Realtime API for voice-to-voice interaction, and LiveKit for orchestration. Add OPENAI_API_KEY to your .env file to enable voice responses; to run a LiveKit room over WebRTC, also add LIVEKIT_API_KEY.

2. LiveKit WebRTC Integration

Stream a bitHuman avatar to a LiveKit room using WebRTC, while controlling the avatar’s speech through a WebSocket interface.

3. Avatar Echo

Basic example that captures audio from your microphone, processes it with the bitHuman SDK, and displays the animated avatar in a local window.

4. FastRTC

Run a LiveKit agent with the FastRTC WebRTC implementation.

How It Works

This example covers the following steps:

- Initializing the bitHuman SDK using your API secret

- Configuring audio and video playback

- Processing audio input and rendering the agent’s reactions

- Managing user interactions

API Overview

bitHuman SDK offers a straightforward yet powerful API for creating interactive agents.

Core API Components

AsyncBithuman

Main class to interact with bitHuman services.

- Authenticate using an API secret

- Load and manage models

- Process audio input to animate the agent

- Interrupt ongoing speech using runtime.interrupt()

AudioChunk

Represents audio data for processing.

- Supports 16kHz, mono, int16 audio format

- Compatible with bytes and numpy arrays

- Provides utilities for duration and format conversions
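
The audio format above (16 kHz, mono, int16) can be prepared and measured without any SDK calls. The following is a minimal sketch using only the Python standard library; the helper names `float_to_int16_bytes` and `duration_seconds` are illustrative and are not part of the bitHuman API. It assumes floating-point input samples in the range [-1.0, 1.0].

```python
import array
import math

SAMPLE_RATE = 16_000   # Hz, the rate stated in the format above
BYTES_PER_SAMPLE = 2   # int16 samples are two bytes each


def float_to_int16_bytes(samples):
    """Convert floats in [-1.0, 1.0] to mono int16 PCM bytes (native byte order)."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)
    pcm = array.array("h", (int(round(s * 32767)) for s in clipped))
    return pcm.tobytes()


def duration_seconds(pcm_bytes, sample_rate=SAMPLE_RATE):
    """Duration of a mono int16 buffer, derived from its byte length."""
    return len(pcm_bytes) / (BYTES_PER_SAMPLE * sample_rate)


# One second of a 440 Hz sine tone as test input.
tone = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
pcm = float_to_int16_bytes(tone)
print(len(pcm), duration_seconds(pcm))
```

Out-of-range samples are clipped rather than wrapped, which avoids the loud artifacts that integer overflow would otherwise produce.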

VideoFrame

Represents visual output data from the SDK.

- Contains image data in BGR format (numpy array)

- Includes synchronized audio chunks

- Provides frame metadata such as frame index and message ID

Input/Output Flow

Input

- Send audio data (16 kHz, mono, int16 format) into the SDK

- The SDK processes this input to generate corresponding agent animations

Processing

- The SDK analyzes audio to produce natural character movements and expressions

- Frames are generated at 25 FPS, synchronized with audio playback
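
The two stated rates imply a fixed amount of audio per video frame: at 16 kHz and 25 FPS, each frame spans 40 ms, or 640 samples. The sketch below works through that arithmetic and splits a buffer into per-frame slices. It only illustrates the relationship between the numbers; how the SDK chunks audio internally is not described here, and `split_into_frame_chunks` is a hypothetical helper.

```python
SAMPLE_RATE = 16_000   # Hz
FPS = 25               # video frames per second
BYTES_PER_SAMPLE = 2   # int16

SAMPLES_PER_FRAME = SAMPLE_RATE // FPS                   # 640 samples
BYTES_PER_FRAME = SAMPLES_PER_FRAME * BYTES_PER_SAMPLE   # 1280 bytes
FRAME_DURATION_MS = 1000 / FPS                           # 40.0 ms


def split_into_frame_chunks(pcm_bytes):
    """Yield consecutive frame-length slices; the last one may be shorter."""
    for start in range(0, len(pcm_bytes), BYTES_PER_FRAME):
        yield pcm_bytes[start:start + BYTES_PER_FRAME]


# One second of silence splits into exactly 25 frame-sized chunks.
one_second = bytes(SAMPLE_RATE * BYTES_PER_SAMPLE)
chunks = list(split_into_frame_chunks(one_second))
print(SAMPLES_PER_FRAME, BYTES_PER_FRAME, FRAME_DURATION_MS, len(chunks))
```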

Output

- Each frame includes visual data (a BGR image) and the corresponding audio chunk

- The example demonstrates real-time frame rendering and audio playback
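
One way to consume such output is a loop that hands each frame's image and audio to playback callbacks while pacing itself at 25 FPS. The sketch below uses a stand-in frame source so that it runs without the SDK; `DemoFrame`, `demo_frames`, and `play` are hypothetical names, and the field layout is an assumption rather than the actual VideoFrame definition.

```python
import asyncio
import time
from dataclasses import dataclass

FPS = 25
FRAME_INTERVAL = 1 / FPS  # 0.04 s between frames


@dataclass
class DemoFrame:
    """Hypothetical stand-in for an SDK frame; field names are assumptions."""
    index: int
    image: bytes  # a real frame would carry a BGR numpy array
    audio: bytes  # 40 ms of 16 kHz mono int16 audio (1280 bytes)


async def demo_frames(count):
    """Stand-in for the SDK's frame stream; yields frames without delay."""
    for i in range(count):
        yield DemoFrame(index=i, image=b"", audio=bytes(1280))


async def play(frames, show, play_audio):
    """Pass each frame to the callbacks, sleeping to hold a 25 FPS cadence."""
    next_deadline = time.monotonic()
    shown = 0
    async for frame in frames:
        show(frame.image)
        play_audio(frame.audio)
        shown += 1
        # Schedule against absolute deadlines so timing errors do not accumulate.
        next_deadline += FRAME_INTERVAL
        delay = next_deadline - time.monotonic()
        if delay > 0:
            await asyncio.sleep(delay)
    return shown


images, audio = [], []
start = time.monotonic()
total = asyncio.run(play(demo_frames(5), images.append, audio.append))
elapsed = time.monotonic() - start
print(total, sum(len(a) for a in audio), round(elapsed, 2))
```

In a real application the callbacks would display the image and queue the audio for output; pacing against absolute deadlines keeps video and audio from drifting apart over long sessions.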